I am extremely hyped about the git integration in Rasa X.

- Alan Nichol (@alanmnichol) January 16, 2020

Just because you're building a product that uses ML doesn't mean you should give up good software development:

- write tests!

- use CI/CD!

- use branches! https://t.co/PBc8hppOQF

Rasa is for building mission-critical conversational AI. So when we prioritize our roadmap, we focus on the product teams who use Rasa to ship high-performance, high-availability assistants that play an essential role in their business. An AI assistant is a product, and just because that product uses machine learning doesn't mean you should give up on good software engineering habits.

Continuous Integration

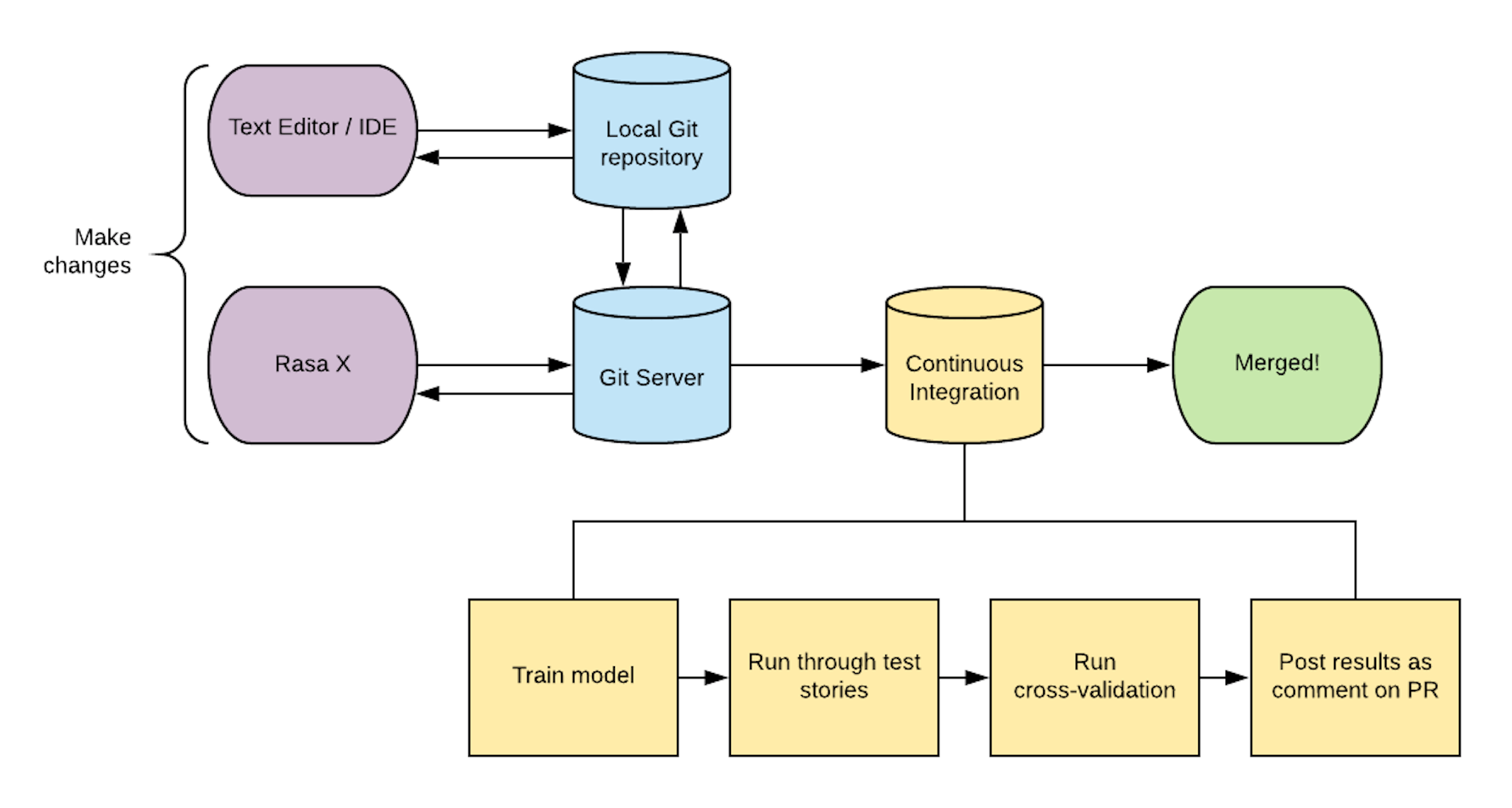

What are the habits great product teams use? Git branches and Continuous Integration (CI), two techniques for making sure you ship reliable software. Here's the usual workflow:

- Create a new branch and add your changes there (for example, a bunch of training data you've just annotated).

- Create a pull request, proposing to merge your branch into another one.

- Tests get run, checking that your changes don't break anything.

- Someone else reviews your changes.

- Once everyone is happy, the branch gets merged.

We made sure that Rasa X fits into this workflow so that you can build an AI assistant this way too.

Test before merging

"Testing" can mean different things, so let's be explicit. When we change our code or training data, we want to answer two questions: (1) do all the most important conversations still work correctly? and (2) did my changes improve my model's ability to generalise to messages and conversations it hasn't seen?

Here is the GitHub Action I have set up for Carbon bot, which checks both of these things. First, it runs through some end-to-end stories to answer (1). Then, to answer (2), it runs cross-validation to check I haven't made the model worse, and it comments on the PR with the results.
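To make the two checks concrete, here is a minimal sketch of how a CI step could turn them into a pass/fail decision plus a PR comment. This is not the actual Carbon bot action: the function name, the use of a single cross-validation score, and the `tolerance` parameter are all assumptions made for illustration.

```python
def build_pr_comment(failed_stories, baseline_score, candidate_score, tolerance=0.01):
    """Return (passed, comment) for a pull request.

    failed_stories: names of end-to-end stories that no longer pass.
    baseline_score / candidate_score: cross-validation scores for the target
    branch and for the PR branch (which metric to use is up to you).
    tolerance: hypothetical allowance for noise between runs.
    """
    stories_ok = not failed_stories
    model_ok = candidate_score >= baseline_score - tolerance

    if stories_ok:
        story_line = "End-to-end stories: all passed"
    else:
        story_line = f"End-to-end stories: {len(failed_stories)} failed"

    lines = [
        story_line,
        f"Cross-validation score: {baseline_score:.3f} -> {candidate_score:.3f}",
    ]
    # List each broken story so the reviewer knows where to look.
    lines.extend(f"- failed: {name}" for name in failed_stories)

    return stories_ok and model_ok, "\n".join(lines)


passed, comment = build_pr_comment([], baseline_score=0.90, candidate_score=0.91)
print(comment)
```

The point of returning both values is that the CI job can fail the build on `passed` while still posting `comment` for the reviewer, so a failing PR explains itself.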

In machine learning there is also the concept of a "test set" (unrelated to software testing) where you hold back some of your data to estimate how well your model generalizes. Cross-validation serves the same purpose without us having to worry about keeping an isolated test set.
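If cross-validation is new to you, the idea fits in a few lines. The sketch below uses made-up messages and a deliberately naive word-counting classifier (both are assumptions for illustration, and nothing like what Rasa does internally). Every example is held out exactly once, so no separate test set is needed.

```python
from collections import Counter
from statistics import mean

# Toy labeled messages (hypothetical data, not from any real assistant).
EXAMPLES = [
    ("hello there", "greet"),
    ("hi", "greet"),
    ("hey hello", "greet"),
    ("good morning hello", "greet"),
    ("bye for now", "goodbye"),
    ("goodbye", "goodbye"),
    ("see you bye", "goodbye"),
    ("bye bye", "goodbye"),
]


def train(examples):
    """Count how often each word appears under each intent."""
    counts = {}
    for text, intent in examples:
        counts.setdefault(intent, Counter()).update(text.split())
    return counts


def predict(model, text):
    """Pick the intent whose training words overlap most with the message."""
    words = text.split()
    # sorted() makes ties resolve the same way on every run.
    return max(sorted(model), key=lambda intent: sum(model[intent][w] for w in words))


def cross_validate(examples, k):
    """Hold out each of k folds once; return the accuracy measured on each fold."""
    scores = []
    for i in range(k):
        held_out = examples[i::k]
        training = [ex for j, ex in enumerate(examples) if j % k != i]
        model = train(training)
        correct = sum(predict(model, text) == intent for text, intent in held_out)
        scores.append(correct / len(held_out))
    return scores


fold_scores = cross_validate(EXAMPLES, k=4)
print(f"mean accuracy over {len(fold_scores)} folds: {mean(fold_scores):.2f}")
```

Comparing the mean score on your branch against the mean score on the target branch tells you whether the change helped or hurt on messages the model was not trained on.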

In production, you only care about getting the right answer. It doesn't matter whether that answer was memorized or predicted; there are no bonus points.

Building on top of Git

Version control in Rasa X is built on top of Git. This means that developers can use any of the Git-based tools they know and love to automate their workflow. Jenkins, GitHub Actions, Travis CI, Kubernetes, Helm, you name it!

If you want to see all of this in action, sign up for our Integrated Version Control webinar on Jan 29th.

I'm thankful that we can look to our community for inspiration, like the Dialogue team, who push the boundaries of Rasa on Kubernetes. They spin up a deployment for every pull request and can test that version of their assistant by messaging /pr-number to their production assistant. So every fix and new feature can be tested without affecting other developers. Not all teams will need that, but we want to make it easy to build these custom workflows with our products.
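I don't know how the Dialogue team implemented this, but the routing idea can be sketched in a few lines. Everything below is hypothetical: the command format (a slash followed by the pull request number, such as `/42`), the URLs, and the function name are assumptions for illustration only.

```python
import re

# Assumed command format: a slash followed by the pull request number, e.g. "/42".
PR_COMMAND = re.compile(r"/(\d+)")

# Hypothetical addresses; a real setup would use whatever its deployments expose.
PRODUCTION_URL = "http://assistant.example.internal"
PR_URL_TEMPLATE = "http://assistant-pr-{number}.example.internal"


def choose_deployment(message, current_url=PRODUCTION_URL):
    """Return the deployment that should handle this sender's next messages."""
    match = PR_COMMAND.fullmatch(message.strip())
    if match is None:
        # Not a switch command: keep talking to whichever deployment is active.
        return current_url
    return PR_URL_TEMPLATE.format(number=int(match.group(1)))


print(choose_deployment("/42"))
print(choose_deployment("hello"))
```

Because the switch is per sender, one developer can test a pull request while everyone else keeps talking to production.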

What's next

We'll be taking this much further, making it easy to fully automate your ops and deployment. Some of the things we're thinking about including:

- Continuous deployment via GitOps and Kubernetes

- Automatically triggering a hyperparameter search and creating a PR with an updated model config.yml

- Anything else you'd like to see? Let us know